Supported Platforms

WhereScape + Databricks

Integrate WhereScape’s automation with Databricks’ Medallion Architecture for streamlined pipelines, real-time analytics and up to 95% less manual coding.

Benefits of Seamless Integration

WhereScape and Databricks deliver a unified solution that automates your data lake development. Leverage Delta Lake for storage and Delta Live Tables for streaming pipelines. Enforce ACID transactions and benefit from real-time insights, auto-scaling and robust data governance.

AUTOMATE

Automated Pipeline

WhereScape RED automates your data pipelines in Databricks by generating ETL code for Delta Live Tables and Databricks notebooks. Enjoy 8x faster deployment cycles and minimal manual coding.

- Automated ETL code generation.

- Up to 95% reduction in manual coding.

- Delta Live Tables integration.

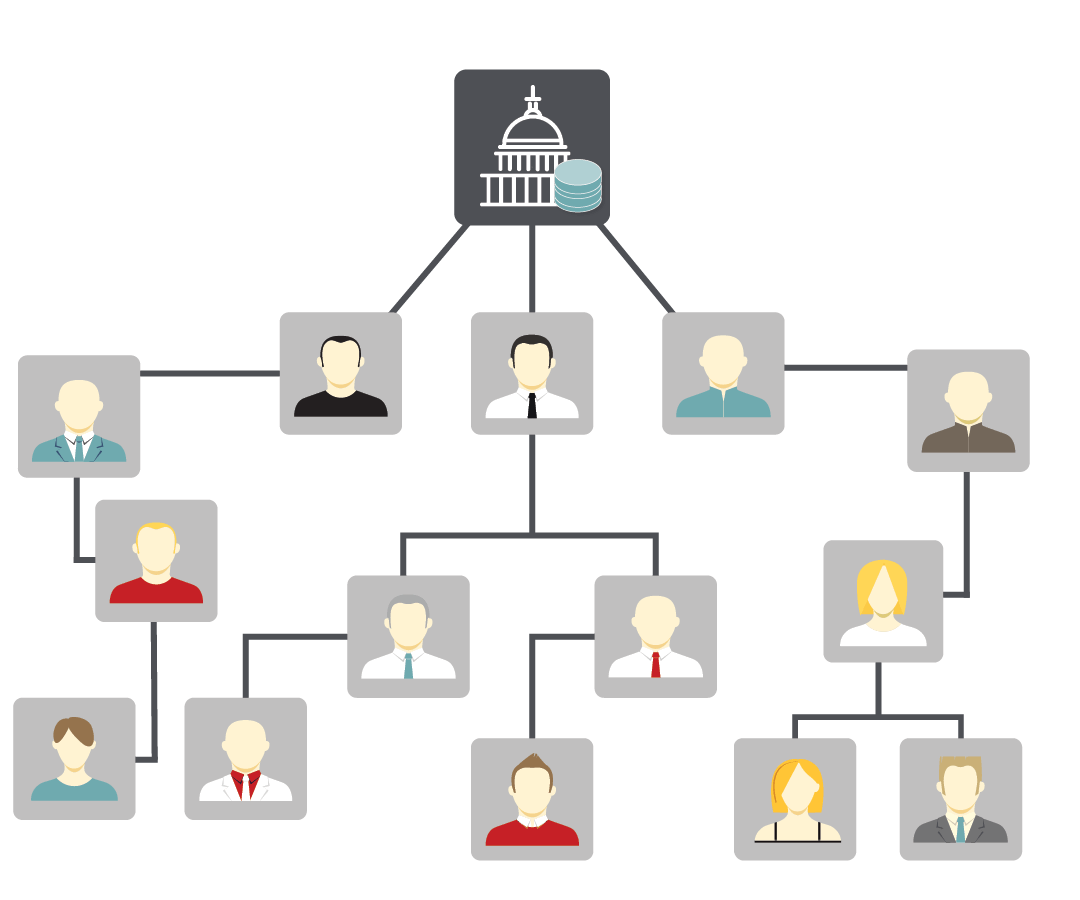

STREAMLINE

Medallion Data Layers

Leverage Databricks’ Medallion Architecture to streamline ingestion across your data lake’s Bronze (raw), Silver (cleansed via Delta Lake) and Gold (curated) layers for analytics.

- Bronze layer – raw data capture.

- Silver layer – cleansing & merging.

- Gold layer – curated analytics.
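
As a rough illustration of how the three layers relate, the sketch below walks a handful of records from Bronze to Silver to Gold. It uses plain Python lists in place of Delta tables, and it is not code generated by WhereScape RED. The sample orders, field names and the `to_silver` and `to_gold` helpers are all hypothetical, chosen only to show the pattern.

```python
from collections import defaultdict

# Hypothetical raw order events, as they might land in the Bronze layer:
# untyped strings, a duplicate, inconsistent casing and a missing value.
BRONZE = [
    {"order_id": "1", "region": "emea ", "amount": "120.50"},
    {"order_id": "1", "region": "emea ", "amount": "120.50"},  # duplicate
    {"order_id": "2", "region": "APAC", "amount": "80"},
    {"order_id": "3", "region": "apac", "amount": None},  # missing amount
]


def to_silver(bronze_rows):
    """Cleanse and merge: drop incomplete rows, fix types, dedupe by order_id."""
    merged = {}
    for row in bronze_rows:
        if row["amount"] is None:
            continue  # skip rows that cannot be used downstream
        # Later rows with the same order_id overwrite earlier ones (a simple merge).
        merged[row["order_id"]] = {
            "order_id": int(row["order_id"]),
            "region": row["region"].strip().upper(),
            "amount": float(row["amount"]),
        }
    return list(merged.values())


def to_gold(silver_rows):
    """Curate: aggregate revenue per region for analytics."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(BRONZE)
gold = to_gold(silver)
print(gold)
```

In a Databricks workspace each step would typically read from and write to a Delta table rather than an in-memory list, but the division of responsibilities between the layers stays the same.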

COMPLIANT

Enhanced Governance

Integrate data governance with Databricks Unity Catalog for full data lineage and secure access controls. Ensure trust and compliance with automated audit trails and governance throughout your data workflows.

- Unified data lineage tracking.

- ACID-compliant transactions.

- Versatile access control.

Additional Features

Dive Deeper into WhereScape and Databricks

Azkaban Scheduling

Improved job management with Azkaban ensures reliable scheduling, reducing your downtime while boosting your throughput.

CLI & API Enhancements

Expanded CLI and API support simplifies integration and automation across all your data workflows.

3D Modeling Integration

Seamlessly export models from WhereScape 3D to RED, accelerating your design and deployment processes.

Automated Documentation

Auto-generated, up-to-date documentation captures complete data lineage and eases governance.

Data Vault Methodology

Leverage Data Vault 2.0 standards for agile, scalable and robust data warehouse solutions.

Deployment Efficiency

Significantly reduce deployment timelines and costs by embedding best practices and automation into your workflows.

Discover: Seamless Databricks Integration

Begin your journey to modernize your data infrastructure with the combined power of Databricks and WhereScape.