
ETL vs ELT: What are the Differences?

In working with hundreds of data teams through WhereScape’s automation platform, we’ve seen the ETL vs. ELT debate evolve as businesses modernize their infrastructure. Both methods move raw data from source systems into a data warehouse, where it can be turned into valuable insights, but they differ in when and where that data is transformed. This distinction is critical when choosing a data processing method, because it directly influences efficiency, scalability, and the speed at which data becomes actionable.

Drawing on our experience working alongside data teams and WhereScape’s 1,200+ customers across industries, this blog clarifies the distinctions between ETL and ELT and explains which approach suits which organizational needs. It also touches on emerging trends in cloud-based data management and how automation tools like WhereScape are revolutionizing these data integration processes.

What is ETL?

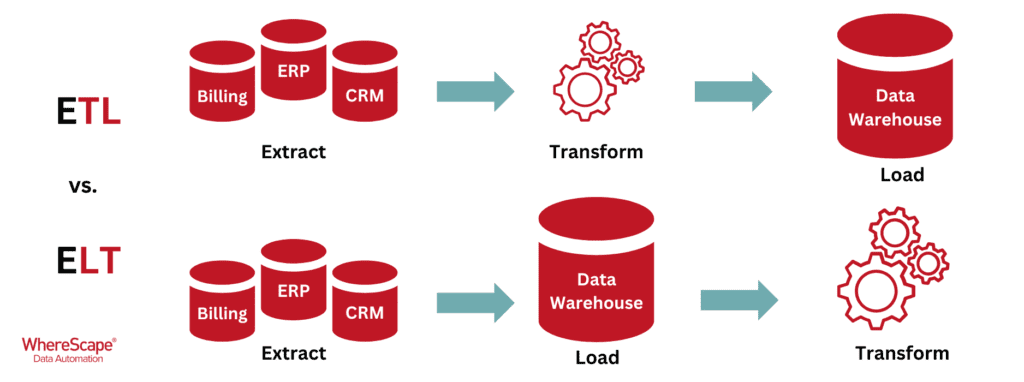

ETL, short for Extract, Transform, and Load, is a cornerstone of data integration strategies. The process extracts data from diverse sources, transforms it into a structured and consistent format, and finally loads it into a data warehouse or repository for analysis. ETL was originally designed to perform transformations outside the target system, at a time when database servers had far less processing power than they do today, and it has evolved significantly since then.
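To make the ordering concrete, here is a minimal Python sketch of the three ETL stages. It is an illustration only, not WhereScape’s implementation: the source records are hypothetical, and an in-memory SQLite database (Python’s standard `sqlite3` module) stands in for the data warehouse. The point to notice is that cleaning and typing happen in application code before anything is loaded.

```python
import sqlite3

# Hypothetical source records, standing in for rows extracted from an
# operational system (inconsistent casing, string amounts, a missing value).
SOURCE_ROWS = [
    {"id": "1", "customer": "  alice ", "amount": "19.99"},
    {"id": "2", "customer": "BOB", "amount": "5"},
    {"id": "3", "customer": "carol", "amount": ""},
]


def extract():
    """Extract: pull raw records from the source (here, an in-memory list)."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: clean and type the data *before* it reaches the warehouse."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue  # drop records that fail a basic completeness check
        clean.append((int(row["id"]), row["customer"].strip().title(), float(row["amount"])))
    return clean


def load(conn, rows):
    """Load: write only the already-transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # SQLite stands in for the warehouse
load(warehouse, transform(extract()))
print(warehouse.execute("SELECT * FROM sales ORDER BY id").fetchall())
# [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

The incomplete third record never reaches the warehouse, which is exactly the trade-off ETL makes: more work up front in exchange for only refined data landing in the target.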

Modern ETL tools—like those referenced in Gartner’s Market Guide for Data Integration Tools—offer functionalities beyond simple data manipulation, including advanced data cleaning, real-time processing capabilities, and graphical interfaces for workflow management. These tools are essential in preparing data for analysis, ensuring data quality and consistency, and supporting business intelligence initiatives. With the advent of cloud computing, ETL processes have become more scalable and cost-effective, further enhancing their importance in data-driven decision-making and analytics.

At WhereScape, we’ve helped enterprises design thousands of ETL pipelines, many of them in the finance and education sectors, where data quality is non-negotiable.

What is ELT?

ELT, short for Extract, Load, and Transform, revolutionizes data integration by leveraging the computational power of modern data warehouses. Unlike traditional ETL, ELT processes extract data from various sources and load it directly into a data warehouse, where transformation occurs.
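Here is a small ELT-style sketch in Python. It assumes an in-memory SQLite database as a stand-in for a cloud warehouse, and the records and table names (`raw_sales`, `sales`) are hypothetical. The raw rows are loaded untouched, and the transformation is a SQL statement executed inside the database; on a real MPP platform, that in-warehouse step is where the warehouse’s parallel compute would do the work.

```python
import sqlite3

# Hypothetical raw records; in ELT they are loaded exactly as extracted.
SOURCE_ROWS = [
    ("1", "  alice ", "19.99"),
    ("2", "BOB", "5"),
    ("3", "carol", ""),
]

warehouse = sqlite3.connect(":memory:")  # SQLite stands in for the warehouse

# Extract + Load: land the untouched data in a raw table inside the warehouse.
warehouse.execute("CREATE TABLE raw_sales (id TEXT, customer TEXT, amount TEXT)")
warehouse.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", SOURCE_ROWS)

# Transform: run SQL inside the warehouse to build a clean, typed table.
# The raw table is kept, so analysts can still query the original data.
warehouse.executescript(
    """
    CREATE TABLE sales AS
    SELECT CAST(id AS INTEGER) AS id,
           UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
           CAST(amount AS REAL) AS amount
    FROM raw_sales
    WHERE amount <> '';
    """
)
print(warehouse.execute("SELECT * FROM sales ORDER BY id").fetchall())
# [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

Because `raw_sales` still holds every extracted row, the transformation can be rewritten and re-run later without going back to the source system.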

This approach uses the data warehouse’s MPP (Massively Parallel Processing) capabilities, found in leading cloud platforms like Snowflake, Redshift, and Azure Synapse, to manage data at scale.

This transition to ELT not only streamlines data workflows but also enhances the management of large-scale data projects. It accommodates a diverse array of data types, including both structured data (such as spreadsheets and databases) and unstructured data (like text documents and social media posts), providing the versatility needed for comprehensive data analysis. ELT facilitates agile data exploration, allowing users to access and interrogate both raw and processed data within the warehouse, which supports dynamic and iterative analysis.

In fact, many WhereScape customers have moved to ELT pipelines during cloud migrations or platform transitions, especially in industries like manufacturing and healthcare. One European mining company used WhereScape to integrate diverse systems into a centralized Snowflake environment, allowing them to streamline reporting and standardize how data was handled across departments. In healthcare, a nonprofit organization facing rapid growth adopted WhereScape RED and 3D to build a Data Vault, replacing manual processes and improving overall data quality and delivery speed.

With the progression of cloud computing technologies, ELT has evolved to become more efficient and cost-effective, positioning it as a crucial element of contemporary data strategies and analytics.

ELT vs. ETL

When comparing ELT vs ETL, we’re evaluating two distinct methodologies for preparing data for analysis. The sequence spelled out by their acronyms offers clues to their differing procedures.

With ELT, raw data is first extracted from its sources and then loaded straight into the data warehouse. The raw data is transformed only after it has been placed in the warehouse. This strategy relies on the computational power of modern data warehouses, which can efficiently run complex transformations on large volumes of data thanks to their massively parallel processing (MPP) capabilities.

Conversely, ETL follows a different approach to raw data. After extraction, the raw data is transformed into a cleaner, more organized format outside the data warehouse, often in a data lake or a separate staging area. This stage is where the data is cleaned, structured, and possibly enriched, preparing it for loading into the warehouse. The ETL process, therefore, is more resource-intensive upfront, ensuring that only well-organized and refined data sets enter the data warehouse, ready for analysis.

For instance, a healthcare provider might prefer ETL to ensure protected health information (PHI) is cleaned and validated before storage, while a media company might use ELT for faster, large-scale content analytics.

Key Differences Between ELT vs ETL: Transformation Location and Process

Here is a quick comparison of the key distinctions:

This table reflects real-world differences seen across WhereScape customers in healthcare, retail, and financial services.

The key difference between ELT and ETL strategies lies in the location and timing of the data transformation process. ELT places the responsibility for transforming data in the data warehouse, after the data has been loaded. This approach is particularly advantageous for large-scale data applications, as it can significantly speed up processing and improve scalability. By conducting transformations within the data warehouse, ELT simplifies the data pipeline and removes the need for a separate staging area outside the warehouse. This is especially beneficial when dealing with a wide array of data sources, including real-time data streams, because data can be processed and analyzed as soon as it lands in the warehouse.

On the other hand, ETL provides a higher level of control over the transformation process by performing it before loading the data into the warehouse. This pre-processing stage is critical for ensuring the quality and consistency of sensitive data, as it allows for thorough cleansing and validation to maintain data accuracy—a vital consideration in environments where the integrity of data is paramount.
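The sketch below shows what that pre-load control can look like for sensitive records. Everything in it is assumed for illustration: the field names, the two validation rules, the placeholder salt, and the choice of salted SHA-256 hashing to pseudonymize the patient identifier. Records that fail validation are quarantined with their reasons instead of being loaded, so only checked, masked rows reach the warehouse (again simulated with SQLite).

```python
import hashlib
import re
import sqlite3

# Hypothetical patient records; field names and rules are illustrative only.
RAW = [
    {"patient_id": "P-1001", "dob": "1984-02-29", "diagnosis": "J45"},
    {"patient_id": "P-1002", "dob": "02/03/1990", "diagnosis": "E11"},  # bad date format
    {"patient_id": "", "dob": "1975-07-14", "diagnosis": "I10"},        # missing ID
]

DATE_RE = re.compile(r"^\d{4}-\d{2}-\d{2}$")
SALT = b"replace-with-a-managed-secret"  # placeholder; never hard-code a real secret


def validate(rec):
    """Return a list of problems; an empty list means the record may be loaded."""
    problems = []
    if not rec["patient_id"]:
        problems.append("missing patient_id")
    if not DATE_RE.match(rec["dob"]):
        problems.append("dob not in YYYY-MM-DD format")
    return problems


def pseudonymize(patient_id):
    """Replace the raw identifier with a salted SHA-256 digest before loading."""
    return hashlib.sha256(SALT + patient_id.encode()).hexdigest()


def transform(records):
    """Split records into loadable rows and a quarantine list with reasons."""
    ok, quarantined = [], []
    for rec in records:
        problems = validate(rec)
        if problems:
            quarantined.append((rec, problems))
        else:
            ok.append((pseudonymize(rec["patient_id"]), rec["dob"], rec["diagnosis"]))
    return ok, quarantined


rows, rejected = transform(RAW)
warehouse = sqlite3.connect(":memory:")  # SQLite stands in for the warehouse
warehouse.execute("CREATE TABLE encounters (patient_key TEXT, dob TEXT, diagnosis TEXT)")
warehouse.executemany("INSERT INTO encounters VALUES (?, ?, ?)", rows)
print(len(rows), "loaded;", len(rejected), "quarantined")  # 1 loaded; 2 quarantined
```

Salted hashing is pseudonymization, not full de-identification, so the actual masking and validation rules should come from your organization’s compliance requirements.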

ELT’s ability to leverage the processing power and scalability of modern data warehouses makes it an ideal choice for sophisticated data transformations, particularly when dealing with diverse and real-time data sources. However, ETL remains the preferred method in scenarios requiring meticulous pre-processing of sensitive data to ensure its quality and structure before it enters the data warehouse. This delineation highlights the strategic considerations businesses must evaluate when choosing between ELT and ETL, depending on their specific needs for data management, real-time processing capabilities, and security concerns surrounding sensitive information.

Benefits of ELT for Cloud-Based Data Management

According to a TDWI report, 65% of organizations have adopted ELT to improve speed and scalability in cloud environments. As more organizations migrate to cloud-based data solutions, the advantages of ELT become increasingly apparent, driving its popularity among data professionals and businesses alike. Because data is transformed inside the data warehouse itself, ELT creates a more direct and efficient workflow: data can be manipulated as soon as it is loaded, which reduces the time and complexity involved in traditional data preparation tasks.

At WhereScape, we’ve seen data teams reduce time-to-insight by up to 60% using ELT automation. Other key benefits include:

- Higher Productivity: By allowing data professionals to perform transformations directly inside the data warehouse, ELT streamlines workflows, reducing the time and complexity typically associated with data preparation tasks. This direct manipulation of data speeds up the entire data processing cycle, enabling quicker insights.

- Increased Scalability: Cloud-based data warehouses provide the flexibility to scale data processing and storage resources up or down as required. ELT takes advantage of this feature, ensuring businesses can easily adjust their capacity to match their current needs without significant upfront investments or delays.

- Fewer Errors: ELT simplifies the data pipeline by consolidating data integration processes within the data warehouse, which minimizes the chances of errors that often occur during data transfer between multiple environments. This streamlined architecture leads to higher data accuracy and integrity.

- Cost Savings: By optimizing data processing and storage, ELT helps reduce operational costs. Traditional ETL processes can generate multiple copies of data, leading to unnecessary resource consumption. ELT, in contrast, reduces data redundancy and waste, leading to more efficient resource use.

- Sustainability: ELT’s efficient use of resources contributes to environmental sustainability by minimizing the carbon footprint associated with data management activities. By reducing data redundancy and optimizing processing, ELT supports corporate sustainability goals alongside its operational advantages.

These benefits highlight why ELT is becoming the go-to choice for organizations looking to leverage the full potential of their data in the cloud era.

Enhancing ETL and ELT with WhereScape Data Automation

Both ETL and ELT workflows benefit from automation, particularly when consistency, scalability, and speed are critical. In our conversations with architects and engineers who use RED and 3D daily, we’ve seen WhereScape’s data automation tools support each approach by minimizing manual development, improving pipeline reliability, and enforcing best practices throughout the data warehouse lifecycle.

WhereScape RED is widely used by teams who adopt an ETL approach, offering end-to-end automation of data extraction, transformation, and loading. It enables developers to manage source-to-target mappings, transformation logic, and workflow orchestration without manual scripting. RED generates native code for platforms like SQL Server, Oracle, and Teradata, providing transparency and control while maintaining data quality.
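Metadata-driven code generation is the general idea behind managing source-to-target mappings without hand-written scripts. The toy sketch below illustrates that idea only; it is not how RED works internally, and the `TableMapping` and `ColumnMapping` structures, the `generate_load_sql` function, and the table names are all hypothetical. A declarative mapping is rendered into a standard `INSERT ... SELECT` statement, which is then executed against SQLite to show that the output is valid SQL.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class ColumnMapping:
    target: str       # column name in the target table
    source_expr: str  # SQL expression evaluated against the source table


@dataclass
class TableMapping:
    source_table: str
    target_table: str
    columns: list[ColumnMapping]
    filter_sql: str = ""  # optional WHERE condition, without the keyword


def generate_load_sql(m: TableMapping) -> str:
    """Render a mapping definition into an INSERT ... SELECT statement."""
    targets = ", ".join(c.target for c in m.columns)
    exprs = ",\n       ".join(f"{c.source_expr} AS {c.target}" for c in m.columns)
    sql = f"INSERT INTO {m.target_table} ({targets})\nSELECT {exprs}\nFROM {m.source_table}"
    if m.filter_sql:
        sql += f"\nWHERE {m.filter_sql}"
    return sql + ";"


mapping = TableMapping(
    source_table="stage_customer",
    target_table="dim_customer",
    columns=[
        ColumnMapping("customer_id", "CAST(cust_no AS INTEGER)"),
        ColumnMapping("customer_name", "TRIM(cust_name)"),
    ],
    filter_sql="cust_no IS NOT NULL",
)
load_sql = generate_load_sql(mapping)
print(load_sql)

# Execute the generated statement to confirm it is valid SQL.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE stage_customer (cust_no TEXT, cust_name TEXT)")
db.execute("CREATE TABLE dim_customer (customer_id INTEGER, customer_name TEXT)")
db.executemany(
    "INSERT INTO stage_customer VALUES (?, ?)",
    [("7", " Acme Ltd "), (None, "Orphan Row")],
)
db.execute(load_sql)
print(db.execute("SELECT * FROM dim_customer").fetchall())  # [(7, 'Acme Ltd')]
```

Because table names and expressions are interpolated directly into the SQL text, a generator like this should only ever read from trusted, governed metadata, never from user input.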

WhereScape 3D supports both ETL and ELT strategies by focusing on discovery, modeling, and documentation. It allows users to reverse-engineer existing environments, perform impact analysis, and generate metadata-driven models that accelerate design and reduce technical debt. In ELT scenarios, 3D helps teams structure transformations to take full advantage of the processing power of platforms like Snowflake, Databricks, Redshift, Microsoft Fabric, Azure Synapse, and more.

Together, RED and 3D help organizations build and maintain a reliable data infrastructure, regardless of the processing method. These tools are in use by over 1,200 organizations worldwide, including enterprises in finance, healthcare, education, and manufacturing sectors where compliance, traceability, and performance are non-negotiable.

By automating repetitive steps and providing visibility into every layer of the data architecture, WhereScape enables teams to scale their data efforts with fewer errors and greater confidence.

Streamline Your ELT Strategy with WhereScape

Deciding on an ELT strategy involves balancing various factors, such as data volume and the need for agile processing. Want to see how top-performing teams across manufacturing, healthcare, and finance automate their ELT strategies?

Request a personalized demo with one of our senior solution architects.

FAQs: ETL vs ELT

Q: What is the main difference between ETL and ELT?

A: The main difference lies in the order of operations. ETL (Extract, Transform, Load) processes data before loading it into a data warehouse, while ELT (Extract, Load, Transform) loads raw data first and transforms it within the warehouse.

Q: Is ELT better suited to cloud data warehouses?

A: Yes, ELT is generally better suited for modern cloud data warehouses like Snowflake, Redshift, and Azure Synapse due to their ability to handle large-scale transformations with MPP (Massively Parallel Processing).

Q: Can ETL and ELT be used together?

A: Absolutely. Many organizations use a hybrid model: ETL for sensitive data requiring preprocessing, and ELT for high-volume or real-time data that benefits from in-warehouse transformation.

Q: How does WhereScape support ETL and ELT?

A: WhereScape RED supports ETL by automating transformation logic before loading. WhereScape 3D supports both ETL and ELT with modeling, reverse engineering, and metadata generation across platforms.

Q: Why is ELT becoming more popular?

A: As cloud environments become the norm, ELT allows organizations to take advantage of scalable compute power for faster, in-warehouse transformations, reducing redundancy and streamlining data pipelines.
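The hybrid model described in this FAQ can be expressed as a simple routing rule. This is a hypothetical sketch: the `Source` attributes, the `Pipeline` enum, and the tie-breaking choices (sensitive data always goes through ETL, and sources that are neither sensitive nor high-volume fall back to a configurable default) are assumptions to adapt to your own governance policies.

```python
from dataclasses import dataclass
from enum import Enum


class Pipeline(Enum):
    ETL = "transform before load"
    ELT = "load raw, transform in the warehouse"


@dataclass(frozen=True)
class Source:
    name: str
    contains_sensitive_data: bool   # e.g., PHI or other regulated fields
    high_volume_or_streaming: bool  # large batches or real-time feeds


def choose_pipeline(src: Source, default: Pipeline = Pipeline.ELT) -> Pipeline:
    """Hypothetical routing rule for a hybrid ETL/ELT architecture."""
    if src.contains_sensitive_data:
        return Pipeline.ETL  # cleanse and validate before anything lands
    if src.high_volume_or_streaming:
        return Pipeline.ELT  # lean on the warehouse's parallel compute
    return default           # everything else: an assumed house default


sources = [
    Source("patient_records", contains_sensitive_data=True, high_volume_or_streaming=False),
    Source("clickstream_events", contains_sensitive_data=False, high_volume_or_streaming=True),
    Source("erp_orders", contains_sensitive_data=False, high_volume_or_streaming=False),
]
for s in sources:
    route = choose_pipeline(s)
    print(f"{s.name}: {route.name} ({route.value})")
```

Checking sensitivity first means a source that is both sensitive and high-volume still gets pre-load cleansing, which matches the FAQ’s emphasis on preprocessing sensitive data.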

About the Authors

Patrick O’Halloran is a Senior Solutions Architect at WhereScape with over two decades of experience in data warehousing and analytics. He works with global organizations to implement automated data infrastructure using WhereScape RED and 3D, helping teams scale their data operations efficiently and reliably.

Kortney Phillips is the Marketing Manager at WhereScape, specializing in data automation strategies and technical content. She collaborates closely with data architects and engineers to translate complex data processes into accessible, actionable insights.

WhereScape at TDWI Munich: Automate Data Vault on Databricks

WhereScape at TDWI Munich 2025: Automate a Full Data Vault on Databricks in Just 45 Minutes June 24–26, 2025 | MOC Munich, Germany As data complexity grows and business demands accelerate, scalable and governed data architectures are no longer optional—they're...

What Is OLAP? Online Analytical Processing for Fast, Multidimensional Analysis

Streamline your data analysis process with OLAP for better business intelligence. Explore the advantages of Online Analytical Processing (OLAP) now! Do you find it challenging to analyze large volumes of data swiftly? A Forrester study reveals that data teams spend...

Build AI-Ready Data: Visit WhereScape at AI & Big Data Expo

June 4–5, 2025 | Booth 202 | Santa Clara Convention Center As organizations scale their artificial intelligence and analytics capabilities, the demand for timely, accurate, governed, and AI-ready data has become a strategic priority. According to Gartner, through...

Automating Star Schemas in Microsoft Fabric: A Webinar Recap

From Data Discovery to Deployment—All in One Workflow According to Gartner, data professionals dedicate more than half of their time, 56%, to operational tasks, leaving only 22% for strategic work that drives innovation. This imbalance is especially apparent when...

What is a Data Model? How Structured Data Drives AI Success

What is a data model? According to the 2020 State of Data Science report by Anaconda, data scientists spend about 45% of their time on data preparation tasks, including cleaning and loading data. Without well-structured data, even the most advanced AI systems can...

Dimensional Modeling for Machine Learning

Kimball’s dimensional modeling continues to play a critical role in machine learning and data science outcomes, as outlined in the Kimball Group’s 10 Essential Rules of Dimensional Modeling, a framework still widely applied in modern data workflows. In a recent...

Automating Data Vault in Databricks | WhereScape Recap

Automating Data Vault in Databricks can reduce time-to-value by up to 70%—and that’s why we hosted a recent WhereScape webinar to show exactly how. At WhereScape, modern data teams shouldn't have to choose between agility and governance. That's why we hosted a live...

WhereScape Recap: Highlights From Big Data & AI World London 2025

Big Data & AI World London 2025 brought together thousands of data and AI professionals at ExCeL London—and WhereScape was right in the middle of the action. With automation taking center stage across the industry, it was no surprise that our booth and sessions...

Why WhereScape is the Leading Solution for Healthcare Data Automation

Optimizing Healthcare Data Management with Automation Healthcare organizations manage vast amounts of medical data across EHR systems, billing platforms, clinical research, and operational analytics. However, healthcare data integration remains a challenge due to...

WhereScape Q&A: Your Top Questions Answered on Data Vault and Databricks

During our latest WhereScape webinar, attendees had fantastic questions about Data Vault 2.0, Databricks, and metadata automation. We’ve compiled the best questions and answers to help you understand how WhereScape streamlines data modeling, automation, and...
